A Science of Change

Concluding chapter in

To Live is to Change. Language, History and Anticipation

by Frederic Chordà

Dedicated to the work of Mihai Nadin

Barcelona: Anthropos Editorial – Nariño, August 2010

Introduction

“Only dead fish go with the flow.” This is one of the “Nadinisms” that pop up on quite a number of websites. Readers who find some of my formulations worth their interest collect them from my various writings. (This interest led me to make my own selection and post it on my website, www.nadin.ws.) Sure, taken out of context, this sentence, like any other expression in language, means different things to different people. I would not be surprised if some of those quoting it (with or without attribution) turn what I meant, namely that opportunism is a dead end, into its opposite: “…twisted by knaves,” as Rudyard Kipling put it in his famous poem “If.”¹ Good ideas have many fathers. Originality eventually percolates and, regardless of attribution, achieves recognition one way or another. Scientific predictions, often adopted without attribution, have a similar destiny.

To explain one’s own work is no easier than to perform the work itself. When Frederic Chordà and Mr. Ramon Gabarros graciously shared with me their intention to publish Vivir es Cambiar (To Live is to Change, in which Professor Frederic Chordà presents my ideas on the post-literate, post-industrial age, and on anticipatory systems and anticipation, to the Spanish-speaking public), I agreed to provide my own understanding of what my work, still (and always) in progress, set out to accomplish. To begin: Do not expect me to rehash my publications; even less, do not expect me to align with the predominant writings of these very unsettled times. As you read my text, please realize that I am only your sherpa. Reaching the peak that you set for yourself will be your own success. (Don’t shoot the sherpa if instead of climbing you prefer strolling through shopping malls.) Within this text, which should make clear that the goal of my research is to provide a foundation for practical endeavors, I will bring up a few themes. One is the new condition of science: the shift from predictive to explanatory science. I will define dynamics, and I will infer from change to our own existence. Addressing the Cartesian foundation of Western civilization, I shall discuss the shortcomings of the most famous method ever. In turn, I shall suggest that a complementary perspective that integrates deterministic reaction and non-deterministic anticipation could better guide us in the new stage we have reached: the civilization of many literacies.

As I worked on this text, it became clear that the unifying theme of my entire work—which integrates semiotics, aesthetics, cognitive science, the new mathematics of computer-based inquiry, and other disciplines (philosophy, design, artificial intelligence, artificial life)—is change. Since I do not ever repeat any of my classes, or any of my lectures, I took this opportunity to add some new thoughts to my work instead of summarizing it.

Prediction and explanation

No science is better than the predictions it affords. But for the last 50 years, science has started to give up even the desire to predict in favor of producing explanations. In the absence of prediction, good explanations, such as of how matter behaves at the nanoscale (which is the smallest known to date), deserve increasing respect. Unfortunately, more and more frequently we get neither good predictions nor acceptable explanations. For example, the failure to predict the latest financial crisis (recognized as such since 2007) is indicative of the scientific condition of economics and of economists: low predictive performance and unsatisfactory explanations, not to mention mediocre ideas for addressing the situation. Even after economics adopted brain imaging in order to lend plausibility to its assertions, it is difficult to watch the melting of financial markets and still trust the field. The fact that, instead of being decoupled from politics, economic theory is fused with it and driven by political opportunism makes the situation even worse. Weather and earthquake prediction, on which lives depend, are much better than they used to be. Nevertheless, if you think about the huge investment made in the hope of improving predictions (we measure more and more, using incredibly expensive technology), the return on the investment is as low as that of playing the lottery. “Are weather or earthquake predictions possible at all?” is a better question than “What else should we measure?” An even better question is “Why is it that natural processes depend on few data, but we continue to measure more and more, instead of looking for the essential?” Chasing after a moving target is not easy.

If we focus on how things take place, instead of finding out why things take place, we will continue to expand the number of parameters we quantify. Moreover: the Why? question is focused on knowledge. The How? question is focused on procedures, i.e., on the expectation that we can emulate the process we attempt to explain. The Why? question brings up causality in a manner that transcends the cause-and-effect sequence. The How? question limits us to cause-and-effect understood in a limited way. All the might of the scientific guns driven by a delusional understanding of causality will not suffice for making science-based predictions much better than they are. Also, they will not deliver better explanations of what has already happened (earthquakes, tornados, epidemics, market crashes, etc.). Moreover, as we shall see, while prediction is important, what really matters is anticipation. My current subject of research is anticipation. The output of this research is the understanding of what is needed to live in a challenging world in order to overcome danger, not by predicting, but by performing successfully no matter what happens.

Let me explain this by way of another example. The prediction of an earthquake might allow for a reduction of the damage it causes (disconnecting electric lines to avoid fires from short circuits; shutting off the distribution of water and gas to avoid damage from broken pipes; limiting traffic to a minimum, etc.). All these measures assume that science can somehow name a date, time, and place for dangerous seismic activity. What is essential is the anticipation at work: people who can avoid harm by positioning themselves so as to reduce physical exposure; people who can perform above their abilities under the pressure of events. Japan’s preparation of its population for such events is instructive. It facilitates learning, which, after all, is the source of anticipation.

My research in anticipation does not fit into any of the many boxes labeled as the sciences of our age. I don’t research chemistry; I am not in physics or nanotechnology; I would not qualify as a meteorologist, not even as a psychologist or linguist, even less as an economist. The predictions implicit or explicit in my scientific hypotheses are not confined to these or other sciences; neither are explanations based on my view of the world. In other words, I have not discovered a method for predicting the performance of the stock market, just as I have not invented weapons for winning the war on terror (whatever such a war might be), or a way to heal cancer. Neither have I conceived of a device for predicting weather or earthquakes. The only claim I can make is “I am a theoretician,” in an age when this qualifier is regarded with some disdain. Everyone wants results, not theories. The predictive and explanatory dimensions of my theories correspond to the integrated perspective from which I consider the subject of my inquiry.

While I respect the extreme specialization that has allowed friends and colleagues to probe deep into the subjects of their interest, my goal is to understand the whole, as an expression of integration (of parts, interactions, the relation to the rest of the world). More precisely, I am interested in the changes through which what is—today’s world, for example—becomes what will be—the world of tomorrow. Or: How will a stem cell unfold to become the individual that each of us is? Or: How does the still non-existent become new realities in science and art? In other words: What is creativity? By creativity I understand the change from what is to what never before existed. In other words, another subject matter of mine is dynamics. The desire to know why things change is the main explanation for my inquiry. What affects change, how change is expressed, how we cope with change—to list a few clear questions—are related to my focus. I am my questions.

Traditionally (since 1932, if you consider H.G. Wells’ Foresight; or since the mid-1940s, if you consider Flechtheim’s futurology; or the mid-1960s, if you consider Future Studies in the work of Kahn, Gabor, and Bell, among others), the domain associated with change has been called Future Studies, to which my scientific interests do not belong. Given the speculative nature of the endeavor, I am quite averse to Future Studies. It never predicted, for example, the change from the classical structure of war, with its well-defined roles and clear distinctions between aggressor and defender, to the new wars of multiple roles, in which victim and perpetrator (sometimes called “terrorist”) are sometimes difficult to define. This was one of my predictions, and I am not sure that it gives me satisfaction to see it validated (cf. The Civilization of Illiteracy, pp. 663–686). Neither did Future Studies predict the faster cycles of innovation and production we have been experiencing, or the accelerated sequence of crises (economic, political, moral, etc.) that confront us. My own predictions of faster cycles of change did not prepare me for coping with rapid innovation (or what is presented as such) and crises.

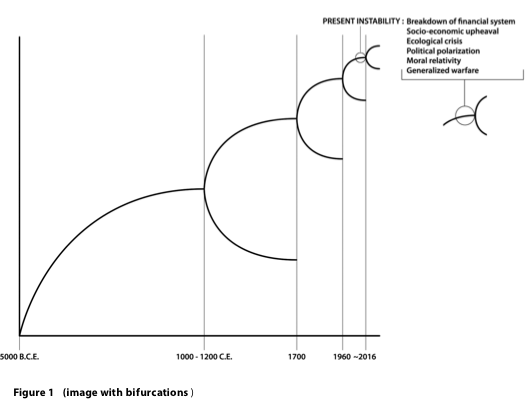

In looking at the dynamics of human history, I realized that each succeeding cycle of change was shorter than the one leading up to it. This thought was inspired by Mitchell Feigenbaum’s research on bifurcations in nature. Picture the branching of a tree. What we also know from the study of dynamic systems is that the time preceding a bifurcation is a time of instability. My prediction of a bifurcation around the year 2016 makes the current instability appear as its preliminary stage. The current instability is defined by the breakdown of the financial system, socio-economic upheaval, political polarization, moral relativity, generalized warfare, and ecological crisis, among others.
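Feigenbaum showed that, in period-doubling cascades, the parameter intervals between successive bifurcations shrink by a nearly constant factor, δ ≈ 4.669. The short Python sketch below only illustrates the arithmetic behind the idea that each cycle is shorter than the one before it. It assumes, purely for illustration, that cycle lengths shrink by a constant ratio (δ is used as the default ratio, and the starting length of 100 units is hypothetical); it is not a reconstruction of how the date mentioned above was derived.

```python
"""Illustration only: cycles that shrink by a constant ratio accumulate to a finite span."""

# Feigenbaum's first constant (period-doubling cascades), rounded to 12 decimals.
FEIGENBAUM_DELTA = 4.669201609103


def shrinking_cycles(first_length: float, count: int,
                     ratio: float = FEIGENBAUM_DELTA) -> list[float]:
    """Lengths of `count` successive cycles, each `ratio` times shorter than the last.

    `first_length` is a hypothetical, arbitrary unit (years, say);
    it is not taken from the text.
    """
    return [first_length / ratio**k for k in range(count)]


def accumulation_span(first_length: float, ratio: float = FEIGENBAUM_DELTA) -> float:
    """Sum of infinitely many such cycles (a geometric series): L * r / (r - 1)."""
    return first_length * ratio / (ratio - 1)


if __name__ == "__main__":
    cycles = shrinking_cycles(100.0, 6)
    for i, length in enumerate(cycles, start=1):
        print(f"cycle {i}: {length:8.3f}")
    print(f"sum of the first 6 cycles: {sum(cycles):.3f}")
    print(f"limit of the sum:          {accumulation_span(100.0):.3f}")
```

Under this assumption, however long the first cycle L is, the total never exceeds L·δ/(δ − 1), roughly 1.27 times the first cycle: the cycles crowd together toward a finite accumulation point.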

Science has kept searching for the better “hammer,” for a world in which all there is can be reduced to “hammering nails.” My interest remained focused on answers that were not based on determinism. In other words, on answers that go beyond “The hammer hits the nail; the nail penetrates the wood,” i.e., cause-and-effect determinism. Indeed, my interest lies in answers to questions such as: “How much force is needed to get the right grip on the hammer?” and “How much force must be applied in the act of hammering to make it successful?” And even more: “Why was the hammer invented?” Better said, “Why do we need a hammer?” It goes without saying that most scientists flow in a direction opposite to mine.

How many times have you read about the discovery of the gene connected to one or another disease, only to learn that this valuable knowledge does not translate into a cure? Finding the gene associated with a certain disease, the pill that will cure obesity, the tennis racquet that will make each of us a champion, the investment scheme that will make us rich: this is what drives scientists, economists, and politicians unable or unwilling to understand how much more complex causality is. There is more to a disease (be it cancer, Alzheimer’s, or baldness) than the gene associated with it; there is more to successful tennis than a racquet, etc., etc. Deterministic predictions are close to perfect when they refer to the realm of physics. The entire space exploration program, extremely successful so far, is based on such predictions. All of us were educated in the expectation that determinism can explain everything. From this derives the human hope that a “hammer” or “magic bullet” or “secret formula” will be discovered, or invented, or designed, that will cure disease, help us eat and drink all we want without gaining weight or raising our cholesterol level, help us become famous athletes, artists, or mathematicians, and enable us to live forever (or close to it). Our understanding of science entails an implicit romantic assumption that science is always a positive assertion, a promise of progress. The prediction implicit in my work, that deterministic science will not solve the problems confronting humanity, does not go with the flow.

It should be added here that even progress along the deterministic view of the world has at times been questioned. Many intellectuals (and not only intellectuals) predicted that airplanes would never fly (e.g., physicist Lord Kelvin and astronomer Simon Newcomb); that a rocket would never leave the earth’s atmosphere (cf. New York Times, 1936); that television would not last (movie producer Darryl Zanuck, 1946; actress Mary Somerville, 1948). My position does not echo their skepticism. Each time someone proclaims something to be impossible, we should be alert to ignorance, dogmatism, lack of vision. While scientists do not reject anticipation as a subject, the dominant attitude is condescension: “You will return to us eventually, since we own everything necessary to practice science.” Hubristic predictions (and mine might be suspected of hubris) are not better. I am still amused by Marvin Minsky’s predictions of 1970 concerning the status of artificial intelligence 20 years hence, which never materialized. Kurzweil’s singularity might also turn out to be more hubris than fact.

What speaks in favor of anticipation is that it is a step not in the same direction, but in a fundamentally different one. A brief argument for this: Infatuation with growth that corresponds to continuation at higher levels translates into the expectation of having more and more, regardless of the price this entails. As industrial society advanced, it needed more energy. The waterfall and the wind (and the associated turbines) were not danger-free, but they were far less consequential than burning coal or oil, not to mention harnessing atomic reactions, in order to drive the steam engine. The determinism embodied in the underlying science (hydrodynamics, aerodynamics, thermodynamics, nuclear physics) led to “bigger hammers,” but not to an understanding of what can happen when the “hammer” gets too big and might adversely affect the lives of those using it. Along the same line, even the best intentions carried out within the deterministic model can result in undesired consequences. The poor need more: food, healthcare, clothing, shelter, education, access to technology, for example. Forcing others, by law or otherwise, to give up some of their privileges is a possible reaction. It is not incorrect to infer from the needs of the poor to the obligation of those who have more to share. But it is limiting. Both poor and rich are affected by the fact that growth at any price has consequences beyond consumption. Pollution, resource exhaustion, disease, cognitive and physical decadence, and global warming affect the poor more than they do the fortunate.

Determinism is ultimately an action-reaction chain. As the complexity of life increases, reaction will not do. Throwing more money at the AIDS problem has not resulted in fewer cases of the disease. Handing out medicine to keep AIDS in check does not deal with the main cause (irresponsible sexual behavior). Making cheap mortgages available to people who could not afford a house did not give them the housing they needed, but rather exposed them to speculation. We need a different perspective on current and future problems, one that integrates all components.

We need to see the complex whole, not only the successes (i.e., technological breakthroughs, as in burning coal, exploring for more oil fields, mastering atomic reactions). Increased energy use affected the human condition in many ways and made life so much more pleasant. But it also generated dependencies and false expectations. An anticipation-based perspective is never a call back to the past, but rather an invitation to consider the richness of possibilities still open. We cannot get the energy we need from waterfalls and dams. (I will not discuss here China’s relocation of millions of people in order to create the biggest dam in the world on the Yangtze River and what the unanticipated results might be.) We cannot get it from wind, even if we filled the world with windmills. There is the sun. And there are many other possibilities. But determinism always looks for the easiest path, not the most appropriate. Anticipation introduces awareness of possibilities, and the expectation of responsible choices that transcend the immediacy of satisfaction at any price.

While prediction legitimizes science (and makes explanations possible), misunderstood predictions can raise false hopes or, worse yet, lead to incorrect interpretations of dynamics. Microsoft, the giant corporation based on an industrial model belonging to the past, should actually not exist in the context in which new forms of work and new conduits for creativity emerge. (That would be the conclusion to draw from my book, The Civilization of Illiteracy.) The broad image that informed this understanding of change, from industries whose engines were driven by water, steam, and burning coal and oil to the digital engine driven by knowledge, is a prediction that resulted from uncovering the underlying structure of work and life in the digital age. At face value, this prediction is far from being confirmed. Microsoft is bigger, but by no means better, than at the time this prediction was articulated. But just to put things in perspective, let us take note of the fact that, internally, Microsoft is split into highly competitive units that are not permanent. Once a project is carried out, the team members compete for a spot on new teams, or generate new projects. The younger company Google goes even further: each of its employees has full control over 20% of their work time, during which they can initiate their own projects. Not confirmed was my prediction that a chess program could win against a human player only if it integrated some anticipatory functions. IBM’s Deep Blue, which beat the world champion (Kasparov), had no anticipatory function, but a lot of brute force. It turns out that, like nature, we can succeed by “throwing” an immense amount of resources at a problem (as the yucca plant survives by enlarging its base when it is covered by the white sand dunes of New Mexico). But is this the most efficient way? The sub-prime mortgage crisis in the USA, which affected the whole world, led to government and private actions that are another example of this strategy. Money (borrowed from China, Singapore, Dubai, etc.) was “thrown” at the public, in order to keep consumption up, and at the banks, to save the financial system (despite the fact that it created the whole mess).

Once such resources become many orders of magnitude larger than the space of possibilities, chances are that the volume of guessing is so large that guessing takes on the appearance of anticipation. Here is an example of what I mean: I have a safe which opens when the digits 4791 are aligned on four tumblers, each with the numbers 0 to 9, which makes 10,000 possible combinations. If one person who does not know the code wants to open the safe, he will have to try all the possible combinations until he hits on 4791. If ten people work at finding the combination, the process will go much faster; and if 10,000 try in parallel, they will find the combination almost immediately. This is due to the brute force of the number of persons applied to the problem: the problem is broken into smaller packages, one for each person, so that each can finish his share more quickly. It only seems like anticipation because, in fact, each person guessed at combinations until the right one was found. They were not selecting from the realm of possibilities. In the case of Deep Blue vs. Kasparov, the computer did not choose from among the realm of Kasparov’s possible moves, but went through every move in the computer’s databank.
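The arithmetic of the safe example can be sketched in Python. The sketch assumes, as a hypothetical simplification, that the 10,000 combinations are dealt out in equal contiguous blocks (0000 to 0999 to the first of ten people, and so on) and that every person tries one combination per round; the function names are illustrative only. Nothing in it selects among possibilities: each person simply enumerates a block.

```python
from itertools import product

DIGITS = "0123456789"  # each tumbler shows a digit from 0 to 9
TUMBLERS = 4           # four tumblers, as in the example
SECRET = "4791"        # the combination that opens the safe


def all_combinations() -> list[str]:
    """Every tumbler setting in order: '0000', '0001', ..., '9999'."""
    return ["".join(p) for p in product(DIGITS, repeat=TUMBLERS)]


def rounds_needed(people: int, secret: str = SECRET) -> int:
    """Rounds until someone hits `secret`, assuming the combinations are split
    into contiguous blocks of (almost) equal size and each person tries one
    combination per round, in order, starting at the beginning of their block."""
    combos = all_combinations()
    block = -(-len(combos) // people)  # ceiling division: combinations per person
    position = combos.index(secret)    # where the secret sits in the full list
    return position % block + 1        # its 1-based position within its own block


if __name__ == "__main__":
    for people in (1, 10, 10_000):
        print(f"{people:>6} people: {rounds_needed(people):>5} rounds")
```

With one person, the safe opens on try 4,792; with ten people, in round 792; with 10,000 people, in the first round. The speed-up comes entirely from dividing the search, not from any better choice of which combination to try.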

It is with this understanding of the predictive legitimacy of science that I want to explain, in the simplest terms, the modest knowledge my research has contributed to the understanding of change.

We are what we are, because everything changes

If things (whatever they may be) were to stay the same, we would not exist! As we know from Heraclitus, nothing stays the same. It is only because things change that we need science: to understand, and to some degree master, change, our own included.

What are we?

The physicist would answer: Matter.

The chemist, fully agreeing with the physicist, would say: Organic matter.

Biologists would define us within the classifications of their science: Homo sapiens.

The physician: We are anatomy and physiology.

The artist: We are makers of art, as well as beings who, in order to account for our place in the world, need art.

The mathematician: We are able to abstract from variety to the universality of numbers and quantitative descriptions of reality.

The geneticist: We are expressions of our DNA.

Computer scientists: We are a computation in progress.

This list can continue, and each definition will reveal a different perspective. Some of these are more general descriptions than others. Some are no more than how one sees the world through the perspective assumed: the eyeglasses we wear (as the metaphor goes); or the microscope with which we examine some details of the world that otherwise we could not see; or the telescope, with its new extensions as satellites and space stations that allow us to see the remote universe; or the computer, our new perspective on everything there is. Is there anything common to these various perspectives? Probably each is correct, but at the same time incomplete. Yes, we are matter, we are organic matter, and we are DNA, we are anatomy and physiology, we are information processing (a bit more sophisticated than computers). But if somehow you, the reader, were living on another planet, knowing the language I use, i.e., the words (“We are matter, we are DNA”) but not having encountered any other human being, could you reconstitute the human being from these descriptions? Better yet: If one or another description (from the physicist, chemist, biologist, computer scientist, etc.) or even all, were placed in a time capsule, would the living subjects of some remote future (let’s say 1000 generations later) be able to identify the human being as we know ourselves to be?

This image illustrates only three possible perspectives through which an individual can be described: genetic code/DNA, body temperature, or physical matter. Another description is “We are what we do.” That is, the variety of activities we engage in during our lifetime defines us. When we paint our home, we are house painters; when we exercise, we are runners, walkers, weight-lifters, etc.; when we cut down a tree, this practical activity defines our identity during the action.

My answer to the question “What are we?” is: “We are what we do.” And this includes why we do it. The way I arrived at this answer is indicative of the scientific method deployed in my research. To define what makes us what we are, we can refer to experimental evidence accumulated over many centuries. It is this kind of experimental evidence that informs the answers given by physicists, chemists, biologists, physicians, artists, philosophers, etc. They infer from observed, and eventually measured, characteristics to the properties of the entities measured. For the physicist, we human beings, along with stones, trees, stars, water, air, ice, and cosmic gases, are nothing but matter: a world made of molecules, atoms, and subatomic particles. Physicists attach to the qualifier “matter” some measurements: weight, density, volume, state (matter is solid, liquid, or gas). All measurements ever performed are a record of how this matter is perceived and, moreover, of how it changes over time. For the chemist, matter needs to be further defined: Stones are made of matter without life, i.e., inorganic. The identifier “organic matter” corresponds to the subset of the elements making up the living (i.e., a subset of Mendeleev’s table). The notion of the living is itself a construct subject to measurement. For the biologist, the distinctions correspond to other constructs: species, subspecies, etc. For the physician, the defining characteristics correspond to what a doctor is supposed to do: heal or, better yet, maintain the living in good health. The construct good health becomes a metric, a “yardstick” against which we measure performance in medical care. Take note of the fact that each definition starts with a construct: something the human being defines. This definition is then applied to the object of our interest. If the object fits the definition, it is legitimized as such. If it does not, other constructs become necessary.

“We are what we do” also means that we are an open-ended process rather than an endpoint. The endpoint implicit in the process called living turns “We are what we do” into “We are what we have done.” One more thing: “We are what we do” also means that we perceive differences as they affect our own actions. Using a stone to throw at a bird entails the realization of differences in weight, shape, texture, and density, regardless of whether we know what weight, shape, texture, and density mean, that is, whether we learned the labels of these characteristics. Throwing a stone at an enemy entails realizations concerning the body, avoidance, and defense mechanisms, as well as the anticipation that someone might do the same to us.

Experience, learning, constructs

It is very important to understand that in the world we live in, we never meet matter, or organic matter, or molecules, or DNA, or anatomy, or even art (not to be confused with particular human-made artifacts called artworks). Likewise, we never meet numbers, or the famous point (from geometry), the line, the surface, the triangle; we never meet pain in its generality, or happiness. Pain in its generality means close to nothing. A toothache or lower back pain is concrete. Numbers are constructs; they are identifiers of quantity. The point and the line are geometric abstractions, and so are circles, triangles, and cubes. Pain is something you experience, as is happiness, and as is love. They are impossible to duplicate or produce. We can quantify pain by using other constructs, such as descriptions of faster or slower heartbeat (something hurts so much that the heartbeat changes), of sweat (cold or hot?), or of some actions (if it hurts a little [yet another construct], press softly [another construct]; if it hurts a lot, press hard [a lot and hard are also constructs]).

Our actions are what define our own existence in this world, not numbers, circles, concepts, or some of our other constructs. Some actions are natural: breathing, eating, drinking, eliminating waste, reproducing. Others are acquired through interaction with the surrounding environment. A newborn, human or animal, has no experience with any of our constructs. But learning (i.e., the acquisition of experience) already starts in the womb, with what is called genetic inheritance. The mother’s body is the first “classroom.” The mother and the future newborn each have a different rhythm, different metabolism, and different interaction with the elements. This “classroom” exposes the fetus to duration, to cycles of light and darkness, to beneficial substances (if the mother eats the right foods) or harmful ones (alcohol, caffeine, drugs). Sooner or later, after birth, the constructs that help us all navigate the universe of our existence are shared. First through actions, later through the labels we use to name them, we learn how to “move” through reality. The fact that Americans keep measuring distances in the more natural foot rather than in the more conventional metric system simply shows that not all constructs are universally shared. If among the constructs that people establish in their activity there are rules of “translation” (like “one foot equals 0.3048 meters”), the results of their activity can be shared.

“We are what we do” means no more than that our identity is, at each moment in time, defined through the actions we perform. The label (name, social security number, or whatever we use to refer to ourselves: tall, blond, talkative), or what others call us, does not identify our sensorial capabilities, our ability to do some things (sing, for instance), or even our inability to do what others do naturally (a blind person is limited in some functions).

A person can be re-presented on his/her driver’s license, attesting to the fact that the person knows how to operate a car, knows the traffic regulations, and is medically fit to drive. A person can be re-presented by his/her fingerprints, biography, and unique signature. On the new Web 2.0 applications of social networking, we are invited to re-present ourselves in a manner that allows others to find shared characteristics.

As you read these lines, your identity is that of a person who, based on a whole set of characteristics (able to see, to read, to select a book from many offerings; affording or taking time, affording the means to access the book, etc.) is at this instance defining himself or herself through this action. You might be a student, a farmer, an unemployed person, or just an accidental reader. All these qualifiers meld into the act you are performing. They also influence how what you do leaves traces: you might take notes; you might make associations with other readings; you might throw the book away, or reflect upon it.

Someone, in the remote past or a second ago, decided to throw a stone at a bird, or to use it to hit something else, or to simply push it aside. The action can be characterized through two questions: Does it reach its goal? Does it affect future actions? What we do is predicated on furthering our existence: from the survival needs of a “natural” past to the ever higher expectations of a present in which survival is replaced by satisfaction, pleasure, or entitlement. More important than the result is the acquired understanding of what makes one action better than another. A successful action without any associated learning is a missed opportunity. Learning means extracting from the action that which might lead to a more successful action, or to avoiding the circumstances that make it a failure. In every action, there is the again that emerges, i.e., the possibility to repeat, always with variation, what proved useful, or to avoid what might be harmful. Again conjures re-presentation, more precisely, the ability to derive what is common in some descriptions from what is infinitely different. If our ability to deal with something we encounter (an animal in the forest, a star in the sky, a sound, an odor, a certain tactile sensation, a taste) transcends the concreteness of the encounter, i.e., transcends its immediacy (here and now), we are involved in re-presentation. In simple terms: the scream triggered by pain is not the pain itself, but an expression, a re-presentation of the pain, which means a new presentation of the pain.

Representations are constructs in which the sensorial—what comes to the being through the senses—submits variety (all sensations) and summons the brain to find what is common—what we call patterns. Since we are what we do, the again-ness informing the process of our existence is the expression of learning in the simplest sense of the term. Fires, whether triggered by lightning, by the spark from two pieces of flint struck one against the other, or by modern ways of starting a fire, are different. But they also have some common characteristics. Experience teaches human beings not to put their hands into a fire. This experience is acquired. We are not born with a sense of the danger of burning our hands, or with the realization of danger associated with many other experiences: jumping from a moving vehicle; walking into a glass door; walking on a slippery floor, for example. Learning is also the ability to extract what is common—you can get burned if you touch fire—from what is different—each fire is different. The log we throw into a fire eventually burns. It does not accumulate experience. Neither does a package falling from a moving truck, or a piece of furniture thrown through a window. Only the living learns.

I mentioned, without going into details, the change in the conditions of conflict embodied in war. Between the tribal wars of the remote past and the new wars of undefined fronts and the multiple roles of the warriors, the difference is not only of scale, but also of the human condition. In order to articulate such predictions, I examine the specific differences between various stages in the unfolding of humankind. For better or worse, I try to model, in the strictest sense of the word, how a certain activity is affected by fundamentally new circumstances of life and work. This is the foundation of my book, The Civilization of Illiteracy.

The inanimate—the name long given to stones, water, coal, the buildings we construct, the machines we make—is made of elements whose properties correspond to their composition. Each inanimate object has a molecular, an atomic, and a subatomic structure. When physicists and chemists describe what makes up an atom or a molecule, what defines an electron or a nucleus, they ascertain an image of homogeneity. All electrons are the same. Based on this observation, the laws of matter apply uniformly: a red stone is as subject to gravity as a bag of blue feathers, a hot liquid, or a very cold volume of gas. So is the living, whether a blade of grass, a pink bird, or a one-legged woman. Yes, the living is matter, and therefore knowledge about matter and its dynamics applies to it as it applies to inanimate physical reality. But the living, i.e., what was called the animate, is more than its physical embodiment. Understanding the way in which the living is different from inanimate physical reality is what informs my science.

The distinction between the falling of a stone and the falling of a cat is at least informative of the difference I refer to. The stone, dropped from the same position and under the same conditions (e.g., no wind, no rain), will fall the same way over and over again. The cat will never fall the same way even twice. The stone is exclusively subject to the law of gravity. The cat is subject to gravity—in particular, Newton’s law of action-reaction—but it is also capable of anticipating the consequences of its falling, that is, of affecting the reaction so that it does not get hurt. The difference between a world subject only to reaction and the world in which reaction and anticipation are simultaneously expressed explains the nature of the predictions that have resulted from my research. The stone remains the same as it falls. The cat adapts: in some ways, it changes shape. The predictions specific to my work are predictions of change.

The monkey leaping from one branch to another is an example of anticipation guiding its actions. If the chosen branch is too weak, or if it were to break, the monkey would be hurt or even lose its life. Scientists “married” to determinism would say that the monkey “calculated” its choices. It turns out that if such a calculus were possible, it would require computational performance far exceeding all the computational capabilities available today. The choices made by monkeys are based on accumulated experience, some of which is transmitted genetically and some of which is acquired through learning.

Where does education fit in this view?

The Industrial Revolution generated uniformity—machines make identical products, be they guns, butter, cars, shoes, books, etc. Within this context, education was destined to become the “industry” of making the same citizens, according to a prototype based on the expectations of the dominant form of activity (in factories, foremost). These are, even in our days, the schools of “mechanical democracy,” in which to be equal (and have equal rights) translates into being the same—homogeneity through sameness as a social ideal. Machines cannot handle differences. The centralized state, the church, the military, and the schools are all expressions of the same industrial need to introduce uniformity into a world of differences. Democracy as such, of ancient extraction, was redefined in the industrial context as an experience based on sameness. My prediction was that the education of our age, of infinite means for distinguishing, will have to shift focus from sameness to differences (see Nadin, Mind—Anticipation and Chaos, The Civilization of Illiteracy, and “Vive la difference”). The reason for this is simple. A limited understanding of literacy in service of industrial society has led schools and universities to focus on taming and sanitizing students’ minds. This conception drove the search for a common denominator, and for outcome metrics that satisfy the obsession with the growth of society. The “No Child Left Behind” initiative in the USA symbolizes the industrial model par excellence. It demonstrates that what seemed to me to be an inevitable process is, unfortunately, not yet taking place. The education of the future, finally freed from the constraints of “brick-and-mortar” classrooms and age distinctions, will eventually acknowledge differences in aptitudes and interests, and in backgrounds (genetic, cultural, social). More important, it will have to stimulate differences, i.e., what is unique in each learner.
Against the background of the current monotony in education, the again-ness cultivated in optimal forms of education cannot consist in the regurgitation of past knowledge; it must consist in stimulating creativity. Summed up in what became another Nadinism circulated on many Websites, the prediction is based on the observation that “Every known form of energy is the expression of difference, not the result of leveling.”

As radical as this concept of education is, it is not exclusive. All I am arguing for is the effort to complement the “one size fits all” education, and the intrinsic understanding of the citizenry as one of never-achieved sameness, with a learning experience that brings out the diamond from the rough, that makes the best of differences. This type of education would no longer be constrained by the permanent structures (and furniture) we still force on our children today. Nor would it be driven by the consumerism implicit in the educational foundation. Its focus has to be on change, not on preserving the individual’s dependency on an economy that has to stimulate consumer demand in order to perform. The need to emancipate education from capitalist requirements, expressed in the expectation of consumption as a premise of prosperity, is part of an unavoidable fundamental process. The place to start is the individual. The individual’s talents and aptitudes are critical in a world of limited resources and extreme competition. Instead of being educated in order to become someone else’s capital and source of profit, the individual must acquire the knowledge and skills on which self-determination is based.

It is in this context that creativity becomes the focus. Let us be clear: to create is a verb that people use with a certain reserve (although Americans like to use the verb a lot without really understanding what it means). After all, creation is at the foundation of religion. This is not the place to enter a discussion on religion, church, atheism—all very hot subjects in the more religious USA, but also in the more secular Europe. However, the origin of the word creation as such informs our understanding today. To create is to make something that never existed before. Scientists create; so do artists; so do a husband and wife when a child is conceived. As a matter of fact, the physical world, as well as all our descriptions (constructs) of the laws governing it, is devoid of creation. The living is in a never-ending creation impetus. Education has to account for this characteristic. The typical political demagoguery—more computers, more Internet access, more bandwidth, more cellular networks, more games, and whatever else the market makes available as the digital revolution advances—is symptomatic of the lack of understanding of the new dynamics of human existence. I don’t discard the need for access to science and technology, just as I don’t dispute the need to finance education more adequately. I do argue in favor of a foundation based on acknowledging uniqueness, differences, dynamics, creativity, and individual self-determination. Neither more computers nor more games, nor faster Internet access addresses the need to rethink education. Education has to focus on interaction skills, and on understanding the dangers of promoting sameness, of leveling, of stability, and of repetitive patterns.

If we are what we do, awareness of the consequences of all our doings deserves to become the focus of education. For me, hide-and-go-seek is the best game ever because it engages creative minds and able bodies. And no matter how many times you play it (Have you given it up?), it remains a source of new situations. “Playing God” is probably the only game I would never recommend as an educational tool. The reason is very simple: Embodied in machines, games are, after all, machines themselves. As training tools (for children, workers, the military), they support improved performance, but not creativity. In fact, the challenge to move from the education characteristic of the democracy of industrial society to an education that partakes in the new dynamics of human existence can be reduced to a simple description: From reaction to anticipation.

A new science

Ours is an age of extensive experimentation and never-ending generation of data. This is the spirit of the time, and no one can avoid being subjected to numbers, which undergird whatever is affirmed (or contested, for that matter). Today’s education system enforces the expectation. Many debates concerning topics of extreme significance end with the rhetorical, “Given the data…” This is how we are conditioned; and this is what feeds the post-theory model of science. Accumulate data, process it, and chances are good that you will find order in the data, or some connections—better yet, correlations. And all these will lead to explanations. This meta-level inquiry—no longer examining what we actually want to know (e.g., how cancer emerges, how bees orient themselves, how we can replace burning oil with some other forms of energy production, how to have a good marriage) but its representations—is a captivating subject in itself. If we teach our students statistical analysis, would they navigate DNA analysis, free market oscillations, and deviant psychological behavior equally well? This question is not trivial and not to be lightly dismissed. The end of theory, as it was proclaimed, would mean that, based on data available at a scale never experienced—and expanding very fast—we would be in the position to act in the absence of knowledge. Given its many consequences, this is a paradoxical development impossible to ignore.

This is what Google does and what Yahoo fails to understand; Microsoft is not even close. Specifically: Without even trying to understand how markets work, what culture is, what design is, or what components of psychology go into advertising, Google decided to build tools to analyze data. The rest is something everyone knows, including the fact that Google’s analytical tools drive even on-line genetic testing (a business Google invested in), as well as political advertising (the 2008 Presidential campaign in the USA is only one example). Should we argue with success?

It would be inaccurate to describe my own research as ignoring experimental data, or even resisting experiment as a scientific method. What distinguishes my integrative method from the reductive method is that I try to bring together the quest for scientific law with the quest for scientific record (the idiographic—which establishes the individual entity as different from the others—and the nomothetic—the characteristics of a determined group of individual entities—as Windelband defined them). Therefore, proper data from a variety of experiments are always considered as I advance my own hypotheses. The data available, gathered over centuries by all kinds of researchers, served well the preoccupation with causation, which resulted in very solid scientific arguments. Among those who—obviously unknowingly—worked for me over centuries are linguists, logicians, mathematicians, physicists, chemists, engineers, as well as artists and writers, anthropologists, geologists, historians, and biologists. Among them are Newton, Descartes, Pascal, and, probably more than others, Leibniz and Aristotle. Charles Sanders Peirce is the mathematician and logician who finally brought what would become my questions into the focus of his logic of vagueness, which is the way he designed his semiotics. In more recent years, since my learning continues, I discovered Walter Elsasser. He could not receive the Nobel Prize, which everyone knew he deserved, because the label vitalism was attached to his attempt to set a strict foundation for biology. In this attempt, the inanimate physical world—his primary domain of expertise, since he was a physicist—and the living are defined in partial contradistinction. And I entered a dialog—abruptly ended upon his untimely death—with Robert Rosen, the only other researcher who was looking at phenomena in which not just the past, but also the future affects the present.
Rosen and I were the first to project into scientific dialog the notion of anticipation. I also discovered Mandelbrot and Feigenbaum, distinguished mathematicians, and Lotfi Zadeh, who not only introduced fuzzy sets, but also founded possibility theory. All these details are yet additional sources of data. And yes, the analytical tools available today could easily help establish correlations among the various ideas and conceptions of scientists such as those I mentioned. Every record from experiments—be they brain imaging data, artificial intelligence performance data, cognitive science determinations, sociological and psychological experiments—can be integrated into the holistic models describing how anticipation is expressed.

The data I refer to consists not only of distinguished theories, but also of information collected in many experiments, in laboratory settings, and in the broad context of our life on Earth. I dare any scientist to generate the petabytes (a petabyte is one quadrillion bytes, the byte being the basic unit of computer storage), or for that matter, the exabytes (an exabyte is a petabyte multiplied by 1000) of data related to the emergence of language, of writing, of artificial languages, of genetic code as yet another language. The experimental method in science, which is at the core of science as practiced today, is based on the ability to generalize from what we, members of the human species, have acquired over our entire history. The world as a laboratory, and durations of thousands of years (relatively short if we think about the billions of years conjured in regard to the age of the planet)—this is a setting replaced by sampling, by short-lived experiments, by sequencing, by generalizations and abstractions. Every bit of data is nothing more than what we project into the measurement process. Let’s think about it. You weigh a stone, for example, and it turns out to be 563 grams. You hurl the stone at a brick wall, and if you throw it with enough force (i.e., if its muzzle speed, the speed at which a projectile leaves a gun’s muzzle, is high enough), the wall breaks. Let’s say that, given the thickness of the wall, you need to expend 4376 joules of energy. This information, experimentally obtained, is useful for building cannons. Science leads to technological achievements. But the knowledge as such is based on the designated constructs: numbers, conventions for writing them, conventions for labeling them, conventions for performing operations (addition, subtraction, multiplication, etc.). These are all re-presentations. And this is what I look for as I try to understand why change takes place, and how it takes place.

Re-presentation

The act of re-presentation is at the same time an act of constitution, of making up the represented in a particular way. A poet can “weigh” the stone and come up with “Can God create a stone he cannot lift?” A blind person can “weigh” it and come up with “heavy” or “shiny” or “warm”. These are all re-presentations. The weight in grams, and the machine that allows us to attach to the stone some numbers, and a label related to the experiment of gravity (it will fall at a certain acceleration) are re-presentations that relate the stone to the laws of gravity (not to God’s omnipotence). In the poet’s re-presentation, the stone might never be lifted. In the musician’s re-presentation, it might be associated with sounds reminiscent of the ocean. What I am saying here is that everything in our universe of existence can be perceived in infinite ways. Infinity—yet another construct—corresponds to the fact that the living, as an ever-changing entity, interacts with everything in many ways. The fact that all that exists is eventually reduced to a “summary” does not make the stone weigh 563 grams. This is a re-presentation from the perspective of a certain action we want to perform. The number attached to the stone’s weight makes a “stone” for the mind, and for other minds, and this re-presentation reduces the stone to its constructed weight. Change the context of measurement—e.g., weigh the stone on a space station—and you have constructed a new “stone.” Change the context of measurement—art, poetry, chemistry, war, geology, morphology—and the same stone is constructed in a variety of ways, all coherent within the activity that involves the stone. The drawing of the stone, the photograph, the film (over a short or longer time), the 3D image, the scanned stone, the wound that the stone might cause—they all make up different realities.
Re-presentation is always a making up of what is represented, a constitution, with respect to an action, to our never-ending “We are what we do.” In writing a poem about a stone, we don’t need the number defining its weight within a certain convention. In treating the wound caused by a stone, the physician might try to find out whether the material making up the stone is poisonous.

Science, as practiced today, would like us to believe that a representation is neutral, inconsequential for what is represented. This fundamental attitude explains why attempts to understand language have always missed the essential aspect: Language does not come into being after what it represents, but in the act, in the process of re-presentation. Language is not a container for the reality it describes: sand, let’s say. It actually reports on our experience with something we encounter in a particular context (you step on it, or look at it). It actually re-makes this reality, re-shapes it. The energy that it takes to perform the function of evoking the experience is the energy of the act through which human beings make themselves, shape themselves, identify themselves as they encounter sand (much softer than pebbles, but also less stable). This is how “self-constitution” is defined. In this sense, language, and in a broader perspective everything that embodies expression—from the slight rolling of one’s eyes to the almost imperceptible moving of lips or nostrils, to the tremor in sounds, shifting of weight from one leg to the other, movement of forehead skin, etc., etc.—is not something we use (like a tool, a pencil, even a word), but something we are.

Interpretation

This view of language stems from semiotics. And semiotics, to be very clear about what it means, is the science dedicated to the study of signs and their interpretation. The definition requires an understanding of what is re-presented: the sign re-presents something else, be this an object in our surroundings, another person, a sensation, a dream, a word or many words making up a text, etc., in conjunction with an interpretation. There are no requirements characterizing an interpretation. It can be right or wrong, complete or incomplete, justified or arbitrary. Think about an apple depicted as the logo of the computer company in Cupertino, California, which some might associate with the fruit, with music (it used to be the logo of a British group), with the designer who produced the graphics, among many possible interpretations. It can be deliberately diverted by association with other signs (“Aww, apple” and a condescending hand gesture; or “Ah, Apple!” in a tone of admiration). “Nothing is a sign unless interpreted as a sign,” means the following: In each sign (gesture, words, sounds, texts, images, or combinations of these), there is a living experience, intended or not for someone else. A scream is the expression of pain. If the receiver is the same as the emitter, the sign informs future actions. More often than we want to acknowledge, we talk to ourselves, give ourselves advice on how to do something better, or how to avoid doing something harmful to ourselves. Whether the talking takes place in the “inner voice” or audibly, it carries the re-presentation of experience and forms a script for change. The life embedded in our expression is part of our life, as we do things. Therefore, “We are what we do” is the basis for endowing all our signs, including language, with life. The scream can call attention to oneself: “Help!” or “Run!” (danger is present). It can act as a sort of weapon, frightening away an animal, a person lying in wait—whatever caused the pain.
As a re-presentation, the sign embodies all the elements on whose basis our perceptions take place.

This understanding of language as congenial to our existence makes it easier to realize that the various sign systems documented to date are a record of our change over time, of what is called evolution. The physical world changes at a different rhythm than that of the living. The timescale of the physical is different from that of the living. The rhythm of the physical is relatively constant. The rhythm of the living is variable. Change in the physical world is the consequence of material processes, and is adequately represented within the descriptions of energy change. (The laws of thermodynamics are the descriptions that characterize such processes.) The shape of mountains doesn’t change overnight, or over years (unless there is an earthquake or a volcano erupts, or strip mining is being carried on); neither do oceans change from one instant to another. Some physical phenomena, all associated with energy differences, can take the form of extreme events. But the processes leading to them (volcano eruptions, earthquakes, hurricanes, tsunamis) are easily associated with broader patterns of change. Change in the physical world has no associated learning in itself.

Change in the living is predicated on several cycles: from conception to birth to death; cycles of interaction (with conception as part of it); cycles of activity (polishing a stone, making a hammer, agricultural cycles, industrial cycles, the speed at which a chip operates, cycles of creativity); cycles of evaluation (from the immediacy of results to longer and longer terms). Many other cycles can be added to this list since change in the living, at a certain level of awareness that the living acquires, starts being change of itself. The Darwinian model known as the theory of evolution is based on data that document this change. Obviously, the timescale of existence of species and the timescale of their extinction refer to changes within the integrated world in which the physical appears as the stage for the unfolding of the living. In recent years, science documented impressive forms of interaction in the living realm. Some scientists would go so far as to speak of languages in reference to elephants, monkeys, bees, and fish. There is no reason to debate this here. Maybe it will help us to state that signals (for danger, reproduction readiness, food source) are part of the “vocabulary” of life, genetically encoded, relatively stable. Signs involve awareness, but at the same time are less precise than signals; that is, they are open to interpretations (“maybe there is danger” is not sufficient when survival cannot depend on a misleading re-presentation). Language, the most complex sign system so far, allows for levels of generality and abstraction that include our considerations regarding signals, and for that matter signs and language itself. The physical is not self-reflective. The living, as it creates its own existence, knows what similarity is, and what difference is, since in making itself, it perpetuates its existence (the similarity) against the background of its change through what it is actually doing.
Our civilization perpetuates Homo sapiens within a context in which the primitive human being could not survive. It mirrors today’s relatives, with individuals creating themselves as adapted to new conditions of life and work, and as able to pursue a sense of future. The physical is always a present. The past is its molding machine. The living, embodied as matter, cannot escape its physical reality. As a material embodiment, the living is molded by the past, as well. But the living is more than embodiment of matter. It is awareness of its own future.

On vitalism, causa finalis, the limits of the second law of thermodynamics, and other taboo subjects

If language were some neutral entity, something we receive and simply use as we use a coffee grinder, a pot, an oven, a car, a pair of scissors, a computer, or a book, we could learn all there is about reality without ever getting involved with reality. The statement, “A stone is heavy,” or “A stone is shiny,” or even “You can break a stone into smaller stones,” would suffice for someone, like a therapist, cook, or train engineer, who could not care less that there is so much more to the real stone than what language describes, most of the time very imprecisely. But language is not neutral; it does not generate a passive description. There is experience in the word heavy: the distinctions among light, heavy, and very heavy are distinctions among experiences, and therefore constitute a record of such experiences. There is history in the word stone, that is, the practical instances that eventually led to the arbitrary sequence of letters s, t, o, n, e to stand for a very large set of material entities that have in common their “stone-ness,” not “mud-ness” or “leaf-ness.”

Pre-sensing, which is something taking place before the senses receive specific information (such as seeing a bear), is the natural form of a perception of change: something (not specific) is approaching; something (not identified) will take place. It is related to what might be. It is clear that sensorial input occurs: you notice broken tree branches, see paw-prints, smell excrement. But pre-sensing does not result in a definite representation. It is an act, more precisely, the desire to enlist all the senses: Give me more! It happens to all of us: an uneasiness going downstairs to a dark area, an alert as we encounter the unknown. Usually, after the experience, the language is confused: “I don’t know how to put it. It felt like a threat. I was anxious.” Self-constitution as in a posture of uneasiness, of defensive fear, of vigilance is the expression of the natural endowment, and probably an evolutionary characteristic. But adventure is also part of the being. The ability to perform well above our endowment when faced with real danger explains adventure. The unfolding of the present in the future can take place through unreflected actions, plans, language, predictions (based on past experience), and anticipations. In the animal realm, signals project a sense of immediacy. Signs transcend immediacy. In culture, signs constitute a shared background of understanding that extends from a past we might not even relate to directly, to the present, and, more important, to the future.

In the physical world, there is no time to relate to because there is no awareness of change. Change is its own record: watch the ocean waves move stones back and forth, a never-ending (it seems) polishing that makes sand from what used to be boulders. Only a film of the action, over a long time (centuries), would show the extent to which the physical and chemical processes involved change that particular aspect of reality. In the living, the change is what we call existence. Therefore, changes in language testify to changes in the human condition, especially in the way we live, perform actions, and interact. Over the longest time, the answers to the question of whether what differentiates the living from the physical is something of a special nature accumulated under the description living force (force vitale).

No one should be surprised that science debunked this notion. Aren’t the forces that relate to the falling of a stone the same as the forces involved in the falling of a cat? Or in our own falling? Strictly speaking, they are the same. But in addition to the forces defined within Newton’s mechanics, there are other forces at work that allow the cat to fall in ways that would prevent it from getting hurt; or forces that we deploy, almost never in awareness of them, to fall in ways that would prevent hurting ourselves. Aware of many such instances in which the living exhibits a different dynamics than the physical, some prominent physicists argued in favor of a “new physics.” Erwin Schrödinger (What is Life?) was one; Niels Bohr, another; Walter Elsasser yet another. They were all qualified—actually disqualified—as vitalistic. To deviate from the confines of the determinism underlying Descartes’ rationalism—inaugurating Western civilization—is to earn the title of vitalist, in our days synonymous with ignorance, or religion. Ever since his reductionist-deterministic construct became the accepted dogma of science, it has rarely been brought up that Descartes himself knew better: “One thinks metaphysically, but one lives and acts physically,” he wrote.

On the basis of this scientific foundation, to explain language is to perform the acrobatics of a description of nature meant to be understood as homogeneous. The premise is seductively promising: machines and human beings are essentially similar; and when they are not, it is not the construct’s shortcoming that we need to address, but that of the being. Since Descartes, we have experienced the spectacular attempt to reach the highest efficiency by eliminating what does not fit into the deterministic scheme. Eliminated, for instance, was the powerful sense of future that makes language not only a repository of past experiences, but also a medium of creation, of new experiences. Institutions of all kinds (church, state, universities, political parties, courts of justice, the military, regulatory boards) adopted determinism as the foundation of their permanence. They developed their languages for the purpose of processing the specific input (religion, education, politics, etc.) into a predictable output (congregants, citizens, alumni, etc.).

The understanding of language as implicit in the human being is the premise for understanding the necessary changes in the ways in which we constitute ourselves. The re-presentations (i.e., the signs, in their fascinating variety) replace the presented. The individual re-presented as his or her DNA is an example. Consequently, we enter a phase of civilization marked by generalized semiotization. For the geneticist, we are our DNA code; for the chemist, the substance of which each of us is made; for the doctor we consult, the battery of tests he will order and then analyze. For the vast networks of all kinds of computation in the new life sciences, we are only the living component. What better example than the massively distributed computer game called Foldit, deployed by a research center that studies the folding of proteins? So far, despite huge investments in new technology and new methods of analysis, protein folding, which explains important aspects of life, remains an unsolved problem. The hope now is that people playing a game in which they fold proteins will generate the data needed to explain how proteins fold. Computationally, this is one of those problems labeled intractable, i.e., it cannot be solved in practice even with all available computation over a lifetime. From the perspective of the broad concept that informs my specific hypotheses, protein folding is guided by anticipation. Moreover, it seems that proteins “know” how they “want” to fold; that is, they move in a predetermined direction. But this is causa finalis (as Aristotle defined it), yet another sin leading to excommunication from politically correct science. The issue is worth discussing, even if only in a limited way.

The living is purposeful. This applies to all forms of life, from the simplest single cell to the human being. An observer of a seed cannot avoid thinking that the seed has a purpose: it “wants” to become a plant. An observer of the falling cat cannot avoid thinking that the cat “knows” how to land in such a way as to avoid getting hurt. And an observer of some human performance (downhill skiing at high speed, returning a tennis serve, or simply using a hammer) cannot avoid “seeing” purpose in successful performance. It appears as though the causa finalis, the future as a goal, is expressed in the action. All of science’s questions focusing on what and how point to a conception of cause and effect that is not only compatible with Descartes’ view and method, but even seems to derive from it. The only question that explodes the system is “Why?”, and this question points to final causes.

The living is characterized by purposiveness. Birds collecting twigs and grass for a nest, ants storing food for winter, and the human being conceiving a house have in common anticipation expressed as purpose.

There is more to account for. The physical changes in ways that fully confirm the well-known Second Law of Thermodynamics: the disorder (entropy) of an isolated physical system increases. The living experiences change along two defining lines. First, through learning (in the most general sense of the word), the living becomes more and more organized. This applies to the body and to the mind. Its cycle of change takes place in a variety of ways, and the “humanness” of the individual person is a record of decreased disorder. Patterns of human behavior and the associated language are an argument in favor of this major assertion. Second, the living is matter, born eventually to die, and its end is entropy. Note that the Second Law of Thermodynamics is not cancelled: the macro-process of individual life ends in death, that is, in entropy. But life as such corresponds to a reduction of entropy. The end of life, i.e., the return to physicality, brings with it higher entropy. The macro-process is complemented by a parallel, informational process of increased organization. Let me make clear that elaborations such as those mentioned (by Rosen, Elsasser, etc.) bring arguments different from mine in support of the hypothesis I have described. Their arguments correspond to a different subject of inquiry.

Antecapere – When everything can be bought, why not anticipation?

The predictive power of Descartes’ Méthode, or of Newton’s science, is fully documented by the impressive accomplishments of science and technology based on their contributions. The analytical power of reductionism and the solid deterministic foundation of science need no additional apologists. Rather, in order to continue the investigation in their spirit, we would be well advised to complement their acceptance by challenging some of the implicit limits of their science. Many scientists are active along these lines. The good news is that some scientists have discovered that in order to understand the living, they need to engage the living, to make it part of the machines they use, instead of making the living behave like a machine. As science advances, the simplistic understanding of causality has quite often been questioned. By way of example, if instead of understanding language, which is extremely complex, we start reducing it to components (alphabets, phonemes, words, vocabulary, syntax, grammar, etc.), we might gain some knowledge about such components. However, language disappears in the effort! The same holds true for space and time. Reduced to distance and interval, they cease to be the “stage” of existence. They become coordinates for describing change seen through a rather small window. The human being subjected to the same reductionist methodology often ends up being seen as hands and feet and ears and eyes and heart and blood and neurons, about which we know so much that we have even made them into the machines we know they are not. You can get artificial limbs; you can get a mechanical heart; you can get artificial ears, and even eyes. But can you get the humanness characteristic of the human being? This is not a rhetorical question.
It calls for the effort of understanding the being as a whole, whose parts interact, including the fact that a failed function in the brain is quite often compensated through a very sophisticated redistribution of resources (what is known as brain plasticity). The integrated nature of the living makes it impossible to follow the reductionist methodology in order to understand what defines life. Reductions can inform our understanding of the physical embodiment, and this is not to be scoffed at. It is a difficult endeavor, implying extremely sophisticated analytical tools and measurement procedures. In this vein, let’s note an interesting situation: the physical cannot reach the dynamics of the living. In simpler terms, neither the stone nor the most sophisticated computer is alive, in the sense of changing, adapting, and anticipating. They do not have the complexity at which self-change, adaptivity, and anticipation can take place. The reverse is not true: the living can behave like a machine, and some human beings even pursue the goal of machine-like behavior. The military of the past very much wanted warriors who were “fighting machines.” “Leave your intelligence at the entry to the garrison” was repeated every so often to enlistees unable to execute an order without thinking about its meaning. The institutions of any given moment prefer machine-like behavior, and many make a good living by conditioning individuals to be machine-like performers. But even the most sophisticated technology, through which speculators in financial markets reach high performance (read: profits), could not save the public from the many crashes experienced in recent years.

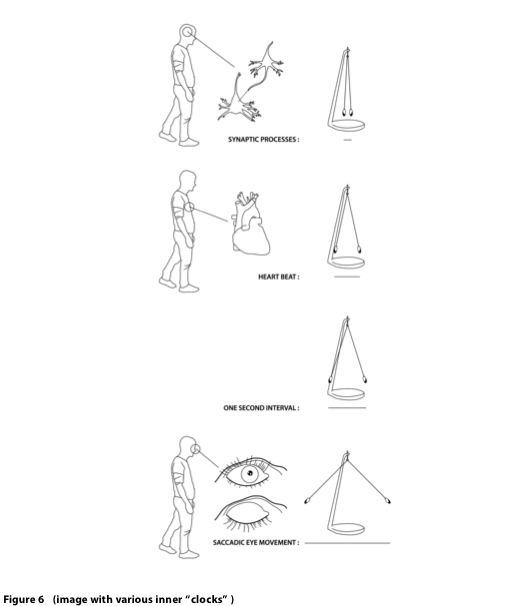

Change, as we have already seen, is variation over a time interval. The variety of timescales affects our ability to find a reference for change. Language changes at a rhythm different from the one experienced by those who are the language: people constituting themselves in language. The past travels at the speed of our learning, of our experience. The future is much faster. We constantly do things (move an object, call someone on the phone, hammer a nail), and in our mind we have finished well ahead of the respective action. This is what anticipation is all about. The reductionist model reduces time to interval, i.e., how many ticks of a clock it takes between starting something and finishing it. But there is more to time than the interval. There exists a plurality of times, which explains the ability of the living to anticipate. Faster than real time, in the language of sound and video recording, means a clock with a faster rhythm than that activated by gravity, or driven by some mechanism modeling a pendulum. The physical world is subject to duration. The living contains its own many clocks, ticking at different rhythms: the day and night cycle (and the associated behavior of plants, animals, and human beings), the heartbeat, the circadian rhythm, and saccadic movements, among others. Synchronization of all these clocks is what maintains the coherence of life. As the physical substratum is affected by wear and tear, the clocks get out of sync; that is, anticipation diminishes and becomes less possible. Our own aging is the most telling example. We are subject to physical laws, like everything else in the world. As the physics changes, our physical make-up weakens. However, anticipation is what makes the difference. If anticipatory performance is maintained, aging can be slowed down. Brain plasticity can be stimulated through a combination of physical and mental activities. This is the object of my Project Seneludens2 (begun in 2004).

This image illustrates some of the various “clocks” functioning in the human body. Anticipation is possible only because some processes are faster than others. Synaptic connections are at the foundation of mind processes (imagining something before we try to accomplish it). The heartbeat can accelerate before we are even aware of danger. Saccadic eye movement is well ahead of our perception of a change within the field of vision.

An everyday example shows how the future affects the present: the “faster than real time” model generated by our minds is an informational reality. Let’s say you cook a meal. The entire action is informed by anticipations of texture, taste, shape, odor, aroma. Each ingredient is first mixed virtually, in the cook’s mind, and it “brings back” from the virtual “finished dish” information that informs the gesture of mixing, adding salt, adjusting the heat. Are we all equally good at conceiving and cooking? Are we all equally good at skiing, playing tennis or chess, writing, composing, inventing, dancing, etc.? No! Anticipation processes are not cause-free, but they are not deterministic. In a deterministic system, the same cause generates the same effect (under the same circumstances). A machine reproduces its performance for the duration of its functioning. In a non-deterministic system, the same cause can have different effects. It is not unusual to experience wrong anticipations. Great tennis players can return a fast serve against all odds, or miss it, and can even miss less powerful serves. Among other reasons, this has to do with the nature of the information traveling from the “informational future” involved in anticipation to the present of the action.
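
The contrast between the two kinds of system can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not a model of anticipation: the function names, the linear nail-depth rule, and the serve-speed probability are all hypothetical, and drawing outcomes from a pseudo-random generator merely stands in for non-determinism (the text does not claim that anticipation is random).

```python
import random


def deterministic_hammer(force: float) -> float:
    """Deterministic: the same cause (force) always gives the same effect.

    Hypothetical rule: nail depth is force divided by 10.
    """
    return force / 10.0


def nondeterministic_return(serve_speed: float, rng: random.Random) -> str:
    """Non-deterministic: the same cause (serve speed) can give different effects.

    Hypothetical rule: the chance of returning a serve falls as its speed rises.
    The pseudo-random draw only stands in for non-determinism.
    """
    p_return = max(0.0, min(1.0, 1.2 - serve_speed / 200.0))
    return "returned" if rng.random() < p_return else "missed"


rng = random.Random(7)  # seeded so the run is repeatable
same_cause_hammer = {deterministic_hammer(50.0) for _ in range(5)}
same_cause_serve = {nondeterministic_return(180.0, rng) for _ in range(50)}
print(same_cause_hammer)  # {5.0}: one effect for one cause
print(same_cause_serve)   # typically both outcomes for one cause
```

Called repeatedly with the same cause, the first function always yields a single effect, while the second yields a set of possible effects.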

There are many definitions of information. I mentioned that Shannon’s theory of information, which is the foundation of all activities in which information is important (from computer science to genetics), derives from the Second Law of Thermodynamics3. As far as the physical aspect of the living is concerned, this understanding of information is an appropriate construct. But the living is more than the physical, and for the specific part of the living where adaptive processes take place, where anticipation is at work, Shannon’s definition (of information as the result of organization) is no longer effective. Let us remember that some researchers studying the living defined information in terms of how form changes (cf. St. Augustine); the word form is at the root of the Latin informare, from which information derives. In this view, heredity, for instance, is not a flow of matter and energy (from the precursor), but of information. Abraham Moles defined information as novelty; and as we consider the succession of generations, we encounter not energy and disorder, but novelty.
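
For reference, Shannon’s measure can be stated compactly. In its standard textbook form, the entropy H of a source that emits n symbols with probabilities p_i is the first expression below (measured in bits); the second is the Gibbs entropy S of statistical thermodynamics, where k_B is Boltzmann’s constant. The identical mathematical shape of the two is the usual basis for relating Shannon’s information to thermodynamic entropy; the formulas are standard definitions, not a formalization of the argument above.

$$
H = -\sum_{i=1}^{n} p_i \log_2 p_i, \qquad S = -k_B \sum_{i=1}^{n} p_i \ln p_i
$$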

Defining information boils down to two entities: a question (formulated explicitly or implicitly) and an answer. The wrong answer is zero information. Some answers are better than others. Therefore, information, like form, is a rather slippery entity, rarely as well defined as we would wish. The information carried from the future (the possible form) to the present guides actions such as those described, and many more. There are instances when our biological condition affects how we question the future. Example: a pregnant woman exhibits better anticipatory behavior than other women. She knows better than a non-pregnant woman how to fall without hurting herself and (more important) the fetus, and how to navigate the dangers intrinsic in the way she eats, drinks, sleeps, and interacts with others. (Much data documenting this point has been published, especially in the Nature journals.)

Interrogation of the future, which is how anticipation works, is actually the consideration of a set of possible answers, all weighted against each other. Deterministic processes range from the simple inference characteristic of cause and effect (hit the nail with the hammer) to sequences of such actions. Non-deterministic processes do not return only one value: if you want to use a hammer, what force do you have to apply? All you know is that you want the nail to go into the wall so you can hang a picture on it. Can you infer from this goal how solid the wall is? How firm the nail? How heavy the hammer? As a reverse “computation,” anticipation is the animation of the entire action from the end to the beginning. Reverse computation can be explained as follows. Imagine you computed your budget: the amount of money spent for food, rent, utilities, medical care, insurance, travel, etc. Can you take the inverse path from the final number in that budget to arrive at your earnings, or the taxes due on them? Answer: this problem cannot be solved through reverse computation.
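
The budget example turns on a simple property: summation is many-to-one. The Python sketch below, with hypothetical item names and figures (not data from the text), shows two different budgets that end in the same total, and then counts how many budgets, in whole steps of 100, could have produced that total. Going backward from the final number yields a crowd of candidates rather than one answer; the earnings and taxes, which never entered the sum, are out of reach altogether.

```python
from itertools import product


def total(budget: dict[str, int]) -> int:
    """Forward computation: from the individual items to one final number."""
    return sum(budget.values())


# Two hypothetical budgets (illustrative figures only).
budget_a = {"food": 400, "rent": 1200, "utilities": 150, "travel": 250}
budget_b = {"food": 600, "rent": 1000, "utilities": 200, "travel": 200}
print(total(budget_a), total(budget_b))  # 2000 2000


def preimages(target: int, n_items: int, step: int = 100) -> list[tuple[int, ...]]:
    """Reverse attempt: every budget (amounts in multiples of `step`) summing to `target`."""
    amounts = range(0, target + 1, step)
    return [combo for combo in product(amounts, repeat=n_items) if sum(combo) == target]


print(len(preimages(2000, 4)))  # 1771 candidate budgets, not one answer
```

Restricting amounts to multiples of 100 keeps the enumeration finite and small; with finer steps the number of candidates only grows.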